Understanding the Differences Between Generative and Discriminative Models in Deep Learning

Deep learning makes use of both generative and discriminative models, each with its own characteristics and applications. Understanding the distinctions between them is essential for applying them effectively across machine learning tasks.

Introduction

In deep learning, the two model families differ in what they learn. Generative models learn how the data itself is distributed, while discriminative models learn only how to map inputs to outputs. That difference shapes the capabilities and typical applications of each.

Overview of Generative and Discriminative Models

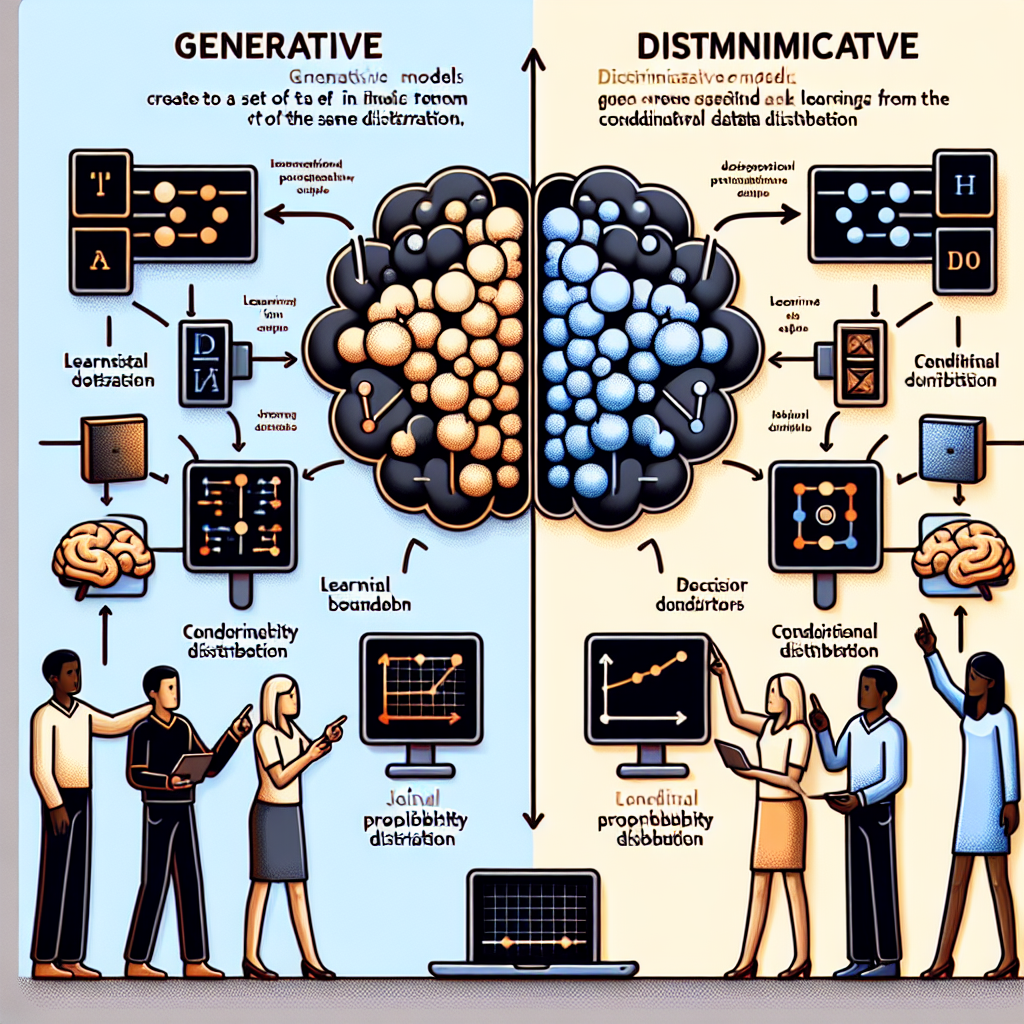

Generative models are designed to learn and mimic the underlying data distribution, which enables them to generate new data points similar to the training data. When labels are available, they model the joint probability distribution p(x, y) of the input features x and the output labels y. For unlabeled data, they model the distribution of the inputs, p(x).

Discriminative models, by contrast, concentrate on learning the boundary between classes. Instead of modeling the entire data distribution, they directly estimate the conditional probability p(y | x) of the output y given the input x.
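Bayes' rule links the two approaches. Write x for the input features, y for the label, and w and b for learned parameters; this notation is introduced here for illustration. A generative model that has learned p(x | y) and p(y), and therefore the joint p(x, y), can recover the conditional distribution. A discriminative model instead parameterizes that conditional directly, as logistic regression does with the sigmoid function σ:

```latex
p(x, y) = p(x \mid y)\, p(y),
\qquad
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}
\qquad \text{vs.} \qquad
p(y = 1 \mid x) = \sigma(w^\top x + b)
```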

Generative models are commonly used for image generation, text generation, and anomaly detection. Because they can produce new samples that resemble the training data, they are valuable in creative applications.

Discriminative models excel at classification, where the goal is to predict the class label of a given input accurately. They are widely used in sentiment analysis, object recognition, and a range of natural language processing tasks.

Understanding how the two differ lets practitioners choose the right model for a given task and tune it effectively. Both types have strengths and weaknesses, and the choice can significantly impact the success of a machine learning project.

Generative Models

Definition and Characteristics

Generative models are a class of deep learning models designed to learn and mimic the underlying data distribution. They capture the joint probability distribution of the input features and output labels, or, for unlabeled data, the distribution of the inputs alone. This lets them generate new data points that closely resemble the training data.

One key characteristic of generative models is their ability to generate data, making them valuable in tasks such as image generation, text generation, and anomaly detection. These models are versatile and can be used in a wide range of creative applications where the generation of new samples is required.
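To make "capturing the joint distribution" concrete, here is a minimal sketch of one of the simplest generative models: a class-conditional Gaussian model on one-dimensional data. It is not a deep model. The class name `GaussianClassConditional` and the toy data are illustrative assumptions. The point is that the same fitted distribution serves two purposes: classifying inputs via Bayes' rule and generating new labelled samples.

```python
import math
import random
from statistics import mean, pvariance


class GaussianClassConditional:
    """Toy generative classifier for 1-D inputs: p(x, y) = p(y) * N(x; mu_y, var_y)."""

    def fit(self, xs, ys):
        self.classes = sorted(set(ys))
        self.prior, self.mu, self.var = {}, {}, {}
        for c in self.classes:
            xc = [x for x, y in zip(xs, ys) if y == c]
            self.prior[c] = len(xc) / len(ys)           # p(y = c)
            self.mu[c] = mean(xc)                        # mean of p(x | y = c)
            self.var[c] = max(pvariance(xc), 1e-6)       # variance floor avoids division by zero
        return self

    def log_joint(self, x, c):
        # log p(x, y = c) = log p(y = c) + log N(x; mu_c, var_c)
        var = self.var[c]
        return (math.log(self.prior[c])
                - 0.5 * math.log(2 * math.pi * var)
                - (x - self.mu[c]) ** 2 / (2 * var))

    def predict(self, x):
        # Bayes' rule: p(y | x) is proportional to p(x, y), so the argmax is the same
        return max(self.classes, key=lambda c: self.log_joint(x, c))

    def sample(self, n, rng=random):
        # Generate new labelled points: draw y from p(y), then x from p(x | y)
        weights = [self.prior[c] for c in self.classes]
        pairs = []
        for _ in range(n):
            c = rng.choices(self.classes, weights=weights)[0]
            pairs.append((rng.gauss(self.mu[c], math.sqrt(self.var[c])), c))
        return pairs


model = GaussianClassConditional().fit([0.1, -0.2, 0.0, 4.9, 5.2, 5.0], [0, 0, 0, 1, 1, 1])
print(model.predict(0.3), model.predict(4.7))  # 0 1
print(model.sample(3, random.Random(0)))       # three new (x, y) pairs
```

Deep generative models replace the per-class Gaussian with a far more flexible, neural-network-based distribution. The idea of sampling from a learned distribution stays the same.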

Applications in Image Generation

Generative models have found significant applications in the field of image generation. By understanding the underlying data distribution of a set of images, these models can generate new images that are visually similar to the training data. This capability has been leveraged in tasks such as creating realistic images from scratch, enhancing image quality, and even generating artistic images based on specific styles or themes.

One popular application of generative models in image generation is the creation of deepfake images, where the model can generate realistic images of individuals based on existing images. This technology has raised ethical concerns but also showcases the power of generative models in creating realistic visual content.

Popular Algorithms

Several algorithms are widely used to implement generative models. One of the best known is the Generative Adversarial Network (GAN), which consists of two neural networks, a generator and a discriminator, trained against each other. The generator turns random noise into new samples, while the discriminator tries to tell real samples from generated ones. In practice the two networks are usually updated in alternating steps, and as each improves against the other, the generated data becomes increasingly realistic.
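The adversarial setup can be summarized by the losses the two networks minimize. The sketch below assumes the discriminator outputs the probability that its input is real. It shows only the loss computations, not the networks or the optimizer, and the function names are illustrative. The generator loss uses the common "non-saturating" form, -log D(G(z)), rather than the minimax form log(1 - D(G(z))).

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for D: real samples are labelled 1, generated samples 0.

    d_real: D's probabilities on a batch of real data, D(x)
    d_fake: D's probabilities on a batch of generated data, D(G(z))
    """
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


# When D cannot tell real from fake, it outputs 0.5 everywhere:
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863 (= 2 ln 2)
print(round(generator_loss([0.5, 0.5]), 4))                   # 0.6931 (= ln 2)
```

In a training loop, each step would typically update the discriminator on `discriminator_loss` and then the generator on `generator_loss`, with gradients supplied by a deep learning framework.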

Another popular approach is the Variational Autoencoder (VAE). Its encoder network maps each input to a probability distribution over latent variables, and its decoder network maps latent samples back to the data space. The two are trained jointly so that reconstructions match the inputs while the latent distribution stays close to a simple prior, usually a standard normal. New data points are then generated by sampling from the prior and passing the samples through the decoder.
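Two ingredients distinguish VAE training from a plain autoencoder:

- the reparameterization trick for sampling the latent code;
- the KL-divergence term that keeps the encoder's distribution close to the standard normal prior.

Together with the reconstruction error, the KL term forms the negative evidence lower bound (ELBO) that VAEs minimize. The sketch below assumes a diagonal Gaussian encoder that outputs a mean `mu` and a log-variance `logvar`. The encoder and decoder networks are omitted, and the reconstruction loss is taken as a given number. The function names and example values are illustrative.

```python
import math
import random


def reparameterize(mu, logvar, rng=random):
    """Draw z ~ N(mu, diag(exp(logvar))) as z = mu + sigma * eps, with eps ~ N(0, 1).

    Expressing the sample this way lets gradients flow to mu and logvar.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def negative_elbo(reconstruction_loss, mu, logvar):
    """Per-example VAE loss: reconstruction error plus the KL regularizer."""
    return reconstruction_loss + kl_to_standard_normal(mu, logvar)


# Hypothetical encoder output for one input
mu, logvar = [0.5, -0.2], [-1.0, 0.0]
z = reparameterize(mu, logvar, random.Random(0))  # latent code that would go to the decoder
print(round(kl_to_standard_normal(mu, logvar), 4))  # 0.3289
```

To generate new data after training, one would sample z from N(0, I) and pass it through the decoder; the encoder is not needed at that point.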

Discriminative Models

Definition and Key Features

Discriminative models are a category of models in deep learning that focus on learning the boundary between different classes in the data. Unlike generative models that aim to understand the underlying data distribution, discriminative models directly estimate the conditional probability of the output given the input.

Discriminative models are particularly strong at classification, where the goal is to predict the class label of a given input accurately. They are widely used in applications such as sentiment analysis, object recognition, and natural language processing.

Use Cases in Classification Tasks

Discriminative models have been used extensively for classification across many domains. One common application is sentiment analysis, where the model classifies text by the sentiment it expresses. By learning which patterns in the text are associated with each sentiment label, a discriminative model can predict the sentiment of new, unseen text.

Another significant use case of discriminative models is in object recognition, particularly in computer vision tasks. These models can identify and classify objects within images, enabling applications such as autonomous driving, facial recognition, and image tagging.

In natural language processing, discriminative models play a central role in tasks such as text classification, named entity recognition, and machine translation. Because they model the desired output directly as a function of the input text, they can process and analyze textual information effectively for a wide range of applications.

Commonly Used Algorithms

Several algorithms are commonly used to implement discriminative models. One of the best known is logistic regression, a linear model for binary classification. It estimates the probability of the positive class by passing a weighted sum of the input features through the sigmoid function.
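The following sketch fits logistic regression with plain batch gradient descent. It shows that the model parameterizes p(y | x) directly and never models the inputs themselves. This is a from-scratch illustration on made-up toy data, with a learning rate and epoch count chosen for that data. In practice a library implementation, such as scikit-learn's `LogisticRegression`, would be used.

```python
import math


def sigmoid(t):
    # numerically stable logistic function
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """p(y = 1 | x) = sigmoid(w . x + b): the conditional probability, modeled directly."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_logistic_regression(xs, ys, lr=0.5, epochs=500):
    """Batch gradient descent on the average log loss (binary cross-entropy), labels in {0, 1}."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            err = predict_proba(w, b, x) - y  # derivative of the log loss w.r.t. the logit
            for j in range(d):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


X = [[0.0, 0.0], [0.5, 1.0], [1.0, 0.5], [3.0, 3.0], [3.5, 2.5], [2.5, 3.5]]
Y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic_regression(X, Y)
print(predict_proba(w, b, [0.2, 0.1]), predict_proba(w, b, [3.2, 3.0]))  # p(y=1 | x) for two new points
```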

Another commonly used algorithm is the Support Vector Machine (SVM). An SVM finds the hyperplane that separates two classes in the feature space with the largest possible margin. The basic SVM is a binary classifier; multiclass problems are handled by combining several binary SVMs, for example in a one-vs-rest scheme.
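A linear SVM can be sketched as subgradient descent on the regularized hinge loss. Points that already lie beyond the margin contribute nothing to the update; only margin violators move the hyperplane. This from-scratch version, its hyperparameters, and the toy data are illustrative assumptions. Production libraries instead use dedicated solvers, such as the SMO-style decomposition in LIBSVM or the coordinate descent in LIBLINEAR.

```python
def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(xs, ys, lam=0.01, lr=0.01, epochs=2000):
    """Soft-margin linear SVM trained by batch subgradient descent, labels in {-1, +1}.

    Minimizes  lam / 2 * ||w||^2 + mean_i max(0, 1 - y_i * (w . x_i + b)).
    """
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the margin-maximizing regularizer
        grad_b = 0.0                     # the bias is not regularized
        for x, y in zip(xs, ys):
            if y * (dot(w, x) + b) < 1:  # point is inside the margin or misclassified
                for j in range(d):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


X = [[0.0, 0.0], [0.5, 1.0], [1.0, 0.5], [3.0, 3.0], [3.5, 2.5], [2.5, 3.5]]
Y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, Y)
print([1 if dot(w, x) + b > 0 else -1 for x in X])  # predicted labels for the training points
```

The sign of `dot(w, x) + b` gives the predicted class. Its magnitude is a decision score, not a probability.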

Deep learning frameworks also offer various neural network architectures for discriminative modeling, such as convolutional neural networks (CNNs) for image classification and recurrent neural networks (RNNs) for sequential data analysis. These architectures have been instrumental in achieving state-of-the-art performance in classification tasks.

Comparison Between Generative and Discriminative Models

Performance Metrics

When comparing generative and discriminative models, one crucial aspect to consider is how each is evaluated. Generative models are typically judged on how well they capture the training data distribution and how closely their generated samples resemble real data. Log-likelihood and perplexity measure how well a model fits held-out data. The inception score assesses the quality and diversity of generated samples.
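Average log-likelihood, perplexity, and the inception score are all simple functions of model probabilities, as the sketch below shows. It assumes these inputs have already been computed:

- the per-token probabilities (for perplexity);
- a classifier's class-probability vector p(y | x) for each generated sample (for the inception score).

In the original inception score, those vectors come from a pretrained Inception image classifier, which is not shown. The function names are illustrative.

```python
import math


def average_log_likelihood(probs):
    """Mean log-probability the model assigns to held-out data points (higher is better)."""
    return sum(math.log(p) for p in probs) / len(probs)


def perplexity(token_probs):
    """exp(-average log-likelihood); lower is better. A uniform model over k outcomes scores k."""
    return math.exp(-average_log_likelihood(token_probs))


def inception_score(class_probs):
    """IS = exp( mean over samples of KL( p(y|x) || p(y) ) ).

    High when each sample is classified confidently AND the samples cover many classes.
    """
    n, k = len(class_probs), len(class_probs[0])
    marginal = [sum(p[j] for p in class_probs) / n for j in range(k)]  # p(y)
    mean_kl = sum(
        sum(pj * math.log(pj / marginal[j]) for j, pj in enumerate(p) if pj > 0)
        for p in class_probs
    ) / n
    return math.exp(mean_kl)


print(round(perplexity([0.25, 0.25, 0.25, 0.25]), 6))                    # 4.0
print(round(inception_score([[1, 0, 0], [0, 1, 0], [0, 0, 1]]), 6))     # 3.0
```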

Discriminative models, on the other hand, are usually evaluated on how reliably they predict the correct class labels. The standard metrics are accuracy, precision, recall, and F1 score.
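These classification metrics can be computed directly from true and predicted labels. The helper below is an illustrative sketch for the binary case; the function name and example labels are made up. Libraries such as scikit-learn provide equivalent functions in `sklearn.metrics`.

```python
def binary_classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one designated positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


metrics = binary_classification_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                                        [1, 1, 0, 1, 0, 0, 0, 0])
print(metrics)  # accuracy 0.75; precision, recall and F1 all 2/3
```

With imbalanced classes, accuracy alone can mislead. A model that always predicts the majority class can reach high accuracy with zero recall, which is why precision, recall, and F1 are usually reported alongside it.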

It is essential to consider the specific task requirements and objectives when selecting the appropriate performance metrics for generative and discriminative models. Understanding the strengths and limitations of each type of model can help in choosing the most suitable evaluation criteria for a given machine learning task.

Training Process

Generative and discriminative models are trained very differently because their objectives differ. A Generative Adversarial Network (GAN) trains a generator network to produce realistic samples alongside a discriminator network that learns to distinguish real from generated data. A Variational Autoencoder (VAE) has no discriminator. It trains an encoder and a decoder jointly by maximizing a lower bound on the data likelihood, the evidence lower bound (ELBO).

Generative models often require complex training procedures, such as adversarial training or variational inference, to learn the underlying data distribution. GAN training in particular can be unstable. It is also prone to mode collapse, where the generator produces only a narrow range of outputs instead of covering the full diversity of the data.

Discriminative models, such as logistic regression and Support Vector Machines (SVMs), instead focus on learning the decision boundary between classes. Training typically means minimizing a loss that penalizes incorrect predictions, such as the log loss (cross-entropy) for logistic regression or the hinge loss for SVMs.

Discriminative models typically need simpler training procedures than generative models, which makes them easier to train and deploy. For linear models such as logistic regression and SVMs, the training objective is convex, so standard optimizers reliably reach the global optimum. Deep discriminative networks are non-convex, but they avoid the adversarial dynamics that make GAN training unstable.

Real-world Applications

Generative and discriminative models serve distinct roles in real-world applications. Generative models are commonly used for image generation, text generation, and anomaly detection. In generation tasks the goal is to produce new samples that resemble the training data. In anomaly detection the learned distribution is used to flag inputs that the model considers unlikely.

Generative models have been applied in creative fields such as art generation, where they can produce visually appealing images and text based on learned patterns in the data. These models are also used in generating synthetic data for training machine learning models and in detecting anomalies or outliers in datasets.
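One common way to use a generative model for anomaly detection is to fit it to normal data and flag new inputs to which it assigns low likelihood. The sketch below uses a single Gaussian as a stand-in for a deep generative model. Its threshold rule flags anything less likely than the least likely training point, an assumption chosen for simplicity; real systems usually tune the threshold on validation data. Function names and readings are illustrative.

```python
import math
from statistics import mean, pstdev


def fit_gaussian(xs):
    """A deliberately simple generative model of 'normal' data: one 1-D Gaussian."""
    return mean(xs), max(pstdev(xs), 1e-6)


def log_likelihood(x, mu, sigma):
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def flag_anomalies(train, candidates):
    """Flag candidates that are less likely than every training point under the fitted model."""
    mu, sigma = fit_gaussian(train)
    threshold = min(log_likelihood(x, mu, sigma) for x in train)
    return [x for x in candidates if log_likelihood(x, mu, sigma) < threshold]


normal_readings = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0]
print(flag_anomalies(normal_readings, [10.05, 14.0, 9.95]))  # [14.0]
```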

On the other hand, discriminative models find extensive applications in classification tasks such as sentiment analysis, object recognition, and natural language processing. These models excel in accurately predicting class labels for input data, making them valuable in tasks that require precise classification and decision-making.

Discriminative models are widely used in practical applications such as spam detection in emails, image classification in medical imaging, and sentiment analysis in social media data. These models play a crucial role in automating decision-making processes and extracting valuable insights from large volumes of data.

Challenges in Using Generative and Discriminative Models

When working with generative and discriminative models in deep learning, there are several challenges that practitioners may encounter. These challenges can impact the performance and effectiveness of the models in various machine learning tasks. Understanding and addressing these challenges is essential for achieving optimal results in model deployment and application.

Overfitting Issues

One common challenge faced when using generative and discriminative models is the risk of overfitting. Overfitting occurs when a model learns the training data too well, capturing noise and irrelevant patterns that do not generalize to new, unseen data. Generative models, in particular, can be prone to overfitting due to their focus on capturing the entire data distribution.

To mitigate overfitting, practitioners can employ techniques such as regularization, dropout, and early stopping during the training process. These methods help prevent the model from memorizing the training data and instead encourage it to learn generalizable patterns that can be applied to new data instances.
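Early stopping is the easiest of these techniques to show in isolation. The sketch assumes validation loss is recorded after each epoch. The function name, the `patience` and `min_delta` parameters, and the loss values are illustrative. It reports which epoch's weights to keep and the epoch at which training would stop.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    best_epoch: epoch whose weights should be kept (lowest validation loss seen).
    stop_epoch: epoch at which training halts after `patience` epochs without an
    improvement larger than `min_delta` (or the last epoch if that never happens).
    """
    best_epoch, best_loss, epochs_without_improvement = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, epochs_without_improvement = epoch, loss, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Validation loss starts rising after epoch 2, a typical sign of overfitting
print(early_stopping([1.00, 0.80, 0.70, 0.72, 0.75, 0.71, 0.90], patience=3))  # (2, 5)
```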

Data Efficiency Concerns

Another challenge that arises when working with generative and discriminative models is data efficiency. Generative models, which aim to understand the underlying data distribution, often require a large amount of training data to learn the complex patterns present in the data. Insufficient data can lead to poor model performance and limited generalization capabilities.

Practitioners can address data efficiency concerns by utilizing techniques such as data augmentation, transfer learning, and synthetic data generation. These methods help enhance the model’s ability to learn from limited data instances and improve its performance on unseen data samples.
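Data augmentation can be as simple as transforming existing training examples in ways that should not change their label. The sketch below assumes images are stored as nested lists of pixel values. It uses two illustrative transforms, a horizontal flip and small Gaussian noise; the function names and the noise scale are assumptions.

```python
import random


def horizontal_flip(image):
    """Mirror an image stored as a list of rows (each row a list of pixel values)."""
    return [row[::-1] for row in image]


def add_noise(image, scale, rng=random):
    """Add small Gaussian noise to every pixel."""
    return [[px + rng.gauss(0.0, scale) for px in row] for row in image]


def augment(dataset, rng=random, noise_scale=0.01):
    """Return the original (image, label) pairs plus a flipped, noisy copy of each.

    Assumes a horizontal flip does not change the label: true for many object
    classes, false for e.g. digits or text.
    """
    out = list(dataset)
    for image, label in dataset:
        out.append((add_noise(horizontal_flip(image), noise_scale, rng), label))
    return out


image = [[0.0, 0.5, 1.0],
         [0.2, 0.4, 0.6]]
augmented = augment([(image, "cat")], rng=random.Random(42))
print(len(augmented))  # 2: the original plus one flipped, noisy copy
```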

Interpretability Challenges

Interpretability matters for both generative and discriminative models. Understanding how a model reaches its outputs is essential for gaining insight and building trust in its decisions. Generative models such as GANs and VAEs can be especially hard to interpret because of their complex architectures and training processes.

To address these challenges, practitioners can use post-hoc explanation methods such as feature attribution, as well as feature visualization and inspection of attention weights. These techniques shed light on the model's decision-making process and show how it generates new samples or classifies input data.
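One concrete feature-attribution technique is occlusion. It measures how much a model's output changes when each input feature is replaced with a neutral baseline value. This is a swapped-in illustration, not a method the text prescribes. The function name and the baseline of 0.0 are assumptions, and `predict` can be any function that maps a feature list to a score. For a linear model the attribution reduces to w_i * (x_i - baseline), which makes the example easy to check.

```python
def occlusion_attribution(predict, x, baseline=0.0):
    """Score each feature by how much the output drops when that feature is occluded.

    predict: any function mapping a list of features to a scalar score.
    """
    reference = predict(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline  # replace one feature with the baseline value
        scores.append(reference - predict(occluded))
    return scores


def linear_model(v):
    # hypothetical model with known weights [2.0, 0.0, -1.0]
    return sum(wi * vi for wi, vi in zip([2.0, 0.0, -1.0], v))


print(occlusion_attribution(linear_model, [1.0, 5.0, 3.0]))  # [2.0, 0.0, -3.0]
```

The second feature has weight zero, so it receives no attribution even though its value is the largest.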

Understanding the differences between generative and discriminative models is essential for using them effectively in machine learning tasks. Generative models learn the data distribution and are valuable for tasks such as image and text generation, while discriminative models excel at classification. Both have strengths and weaknesses. Choosing the right model for a task, and then tuning it well, can significantly impact the success of a machine learning project.
